Transformation-Based Learning

Transformation-based learning

Transformation-based learning (TBL) is a (mostly) supervised machine learning method for sequential classification, invented by Eric Brill (Brill, 1993, 1995). It is widely used within natural language processing (but surprisingly little in other areas). TBL is a simple yet flexible paradigm, which achieves competitive or even state-of-the-art performance in several areas and does not overtrain easily. It is especially successful at catching local, fixed-distance dependencies. The learned representation – an ordered list of transformation rules – is compact and efficient, with clear, declarative semantics. Individual rules are interpretable and often meaningful to humans.

I have compiled (and intend to maintain) a TBL bibliography (with about 200 entries as of 2013). It is an update and extension of a similarly scoped bibliography with around 75 entries, collected up until 2002 by Torbjörn Lager (no longer updated). Corrections and suggestions for additional entries are welcome.

I have also written a rather comprehensive survey on TBL.

TBL Implementations

The survey includes (as an appendix) a lightly annotated list of the most used TBL implementations up until 2013, and their relative merits.

What follows below is basically an htmlified version of that list (where "Section 4" and similar refers to the main survey). However, it is placed online and thus updatable with additions or revisions of systems I come across (or am being pointed to). On the whole, the situation with respect to TBL implementations as of 2014 could perhaps best be summarized as "contributions welcome".

There is a (small) number of TBL implementations available on the web. Four influential ones are briefly presented in the rest of this section. Naturally, any such list is doomed to be subjective, and it will not contain the many systems that have been built for specific purposes with no (official) public release of the code—an understandable strategy, because publishing code often leads to expectations on portability, maintenance, support, etc., which an individual researcher may not wish to raise. However, the implementations selected are generally described in publications, and they are or at least have been often used (and cited) by other authors. They also have rather different characteristics, highlighting typical tradeoffs one may have to make when choosing an implementation (performance, documentation, assumptions on data, extensions to the original algorithm, a DSL for the templates, unicode handling, availability of source code, etc).

We note up front that there is ample room for additional, modernized TBL implementations. The latest code changes for the systems described here happened quite some time ago. As one consequence, several of the enhancements of Section 4 are at present not available in any system, much less in a single one. As another, the implementations were generally written in and for other environments and may suffer from some degree of software rot -- making them run on a standard desktop system as of 2013 may well take some effort. For the possible convenience of the reader, the steps we have tried to make them run on a recent Linux desktop (Ubuntu 13.04, 64b) are documented here.

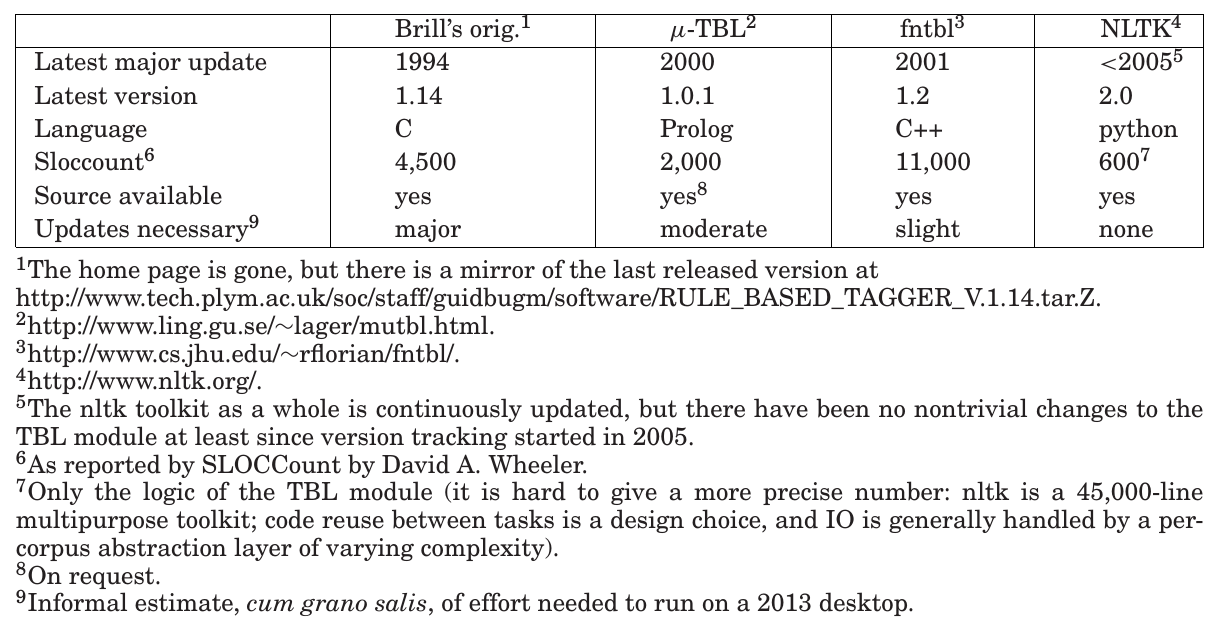

The table below, from the survey, gives some overall information on the four systems.

In the following, we further briefly comment on the different strengths and weaknesses of each.

Brill's original implementation

Brill’s original TBL implementation was the first of its kind and was generously made available on the web early on. It has accordingly been used for POS tagging in a large number of settings. It contains many design choices geared toward this specific task (especially for English), and using it for something else without additional coding is hardly feasible (or intended). The templates are atomic (see the sample) and hardcoded in the C source, so even for POS tagging, experimentation requires a major programming effort (even making it compile on a modern system will likely require some work). By now, it is of historical interest only.

μ-TBL

μ-TBL is mostly well-documented in an online manual and in publications [Lager 1999b]. It has been used in several real-world projects. From its Prolog origins, it inherits an interactive interface for experimentation, and it has a scripting language for automation. Error analysis is facilitated by automatically generated html reports. Code for compiling sequences of learned rules into Java source code (cf. Section 4.3.2) is provided but rather sparsely documented.

As noted in the survey, the template specifications of μ-TBL are ordinary Prolog clauses, forming a declarative DSL, expressive but concise. Simple templates should be reasonably easy to understand and modify for domain experts, even without insights into Prolog (or even programming). Still, templates may use arbitrary Prolog code for complex tasks (cf. Figure 10, Section 4.6). The extension to learn Constraint Grammar rules by TBL is seamless from the user’s perspective.

On the downside, μ-TBL was developed under Sicstus, a commercial and relatively expensive implementation of Prolog for whose availability no guarantees can be made. Ports between Prolog systems are usually possible, but tiresome. Compared to state-of-the-art in TBL efficiency, μ-TBL is rather slow. In addition to an implementation similar to Brill’s original algorithm, it includes the fast randomized training algorithm developed by Samuel [1998b] and mentioned in Section 4.3.1, but none of the sometimes preferable alternatives (e.g., Ramshaw and Marcus [1994]; Ngai and Florian [2001b]; Hepple [2000]; dos Santos and Milidiu ́ [2007]). Data are input as Prolog databases (see the sample), rather than in much more widely used formats such as CSV, JSON, or XML. All data partitioning into train and test set must be done by preprocessing. The system should be commended for its modular design with respect to training algorithms, but the API makes assumptions on the template format (i.e., it presupposes templates such as Table I of Section 2.2, rather than Table II of Section 2.3). The latest code change is from 2000: thus, the system suffers slightly from general bit rot (no unicode; poor use of later hardware, especially memory). Torbjörn Lager, the author of μ-TBL, says (p.c.) that a project revival is planned, although without giving any time schedule.

FnTBL

FnTBL is the fastest and is widely used. It implements several of the extensions of Section 4—a fast training algorithm (Ngai and Florian [2001b], Section 4.3.1); multidimensional learning (Florian and Ngai [2001], Section 4.1.1); and probabilistic TBL (Florian et al. [2000], Section 4.2.1). According to the manual (from 2002), however, the implementation of probabilistic TBL is broken and should not be used.

On the whole, fnTBL is well-documented, especially for the tasks the authors have applied it to (word sense disambiguation, POS tagging, base NP chunking) where working examples are included. Templates are specified as p => c, where p = p 1 . . . p k is a conjunction of atomic predicates p i and c is the target classification to be changed. Disjunction of predicates is not allowed. The most useful atomic predicates are predefined, including the ones exemplified in the sample (i.e., feature identity of current element, feature identity of neighboring element, feature identity of any element within a window). There is also a predicate that checks whether any of an enumerated set of features has a given value (this is useful for nonsequential classification, such as PP attachment). The atomic predicates are the main way to communicate with the system. According to the manual, adding new atomic predicates “should not present a big programming challenge.” Nevertheless, even for fluent C++ programmers, this solution is not nearly as flexible to work with as a DSL.

NLTK

The TBL implementation present in the Natural Language ToolKit (NLTK) [Bird et al. 2009] is part of a larger system for natural language processing, written in Python and actively developed. The toolkit offers APIs to all kinds of language-processing modules and utilities. Performance is not the main design criterion of NLTK, but rather code clarity and understandability—the toolkit is often used in teaching. Its TBL implementation is basic and geared toward the specific task of POS tagging (as the names of the methods in the sample imply). Especially for Python programmers, the TBL module should be relatively easy to use and possibly extend (although the object-oriented template specification may seem clunky). A major drawback is that rule templates can only use a single feature (although at any number of positions). The actual TBL algorithms present are two: Brill’s original and a fast but memory-hungry variation of the algorithm of Ramshaw and Marcus [1994] (Section 4.3.1). With proper generalization and extension, the impressive infrastructure of NLTK would make it an interesting tool for TBL applications, but currently it seems less out-of-the-box useful than it could be. The author has planned an update for the upcoming NLTK3.